BinEgo‑360°: Binocular Egocentric-360° Multi-modal Scene Understanding in the Wild

Welcome to the BinEgo‑360° Workshop & Challenge at ICCV 2025. We bring together researchers working on 360° panoramic and binocular egocentric vision to explore human‑like perception across video, audio, and geo‑spatial modalities.

Overview

This half-day workshop focuses on multi-modal scene understanding and perception in a human-like way. Specifically, we focus on binocular/stereo egocentric and 360° panoramic perspectives, which together capture first-person views and third-person panoptic views of a human in the scene, combined with multi‑modal cues such as spatial audio, textual descriptions, and geo‑metadata. Topics include, but are not limited to:

- Embodied 360° scene understanding & egocentric visual reasoning

- Multi-modal scene understanding

- Stereo vision

- Open‑world learning & domain adaptation

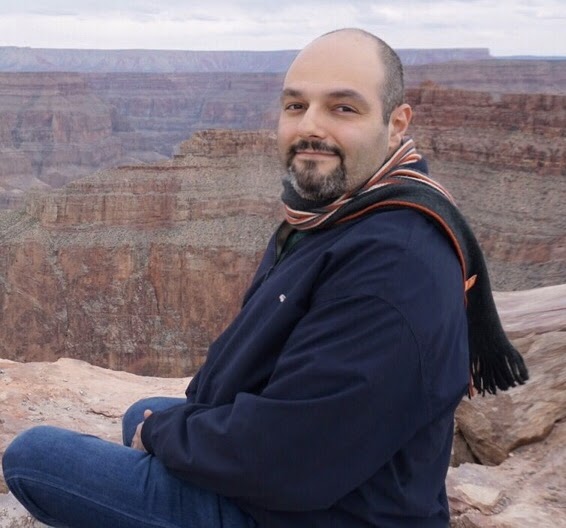

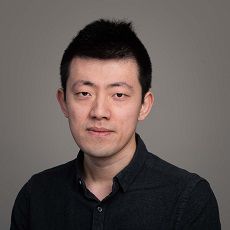

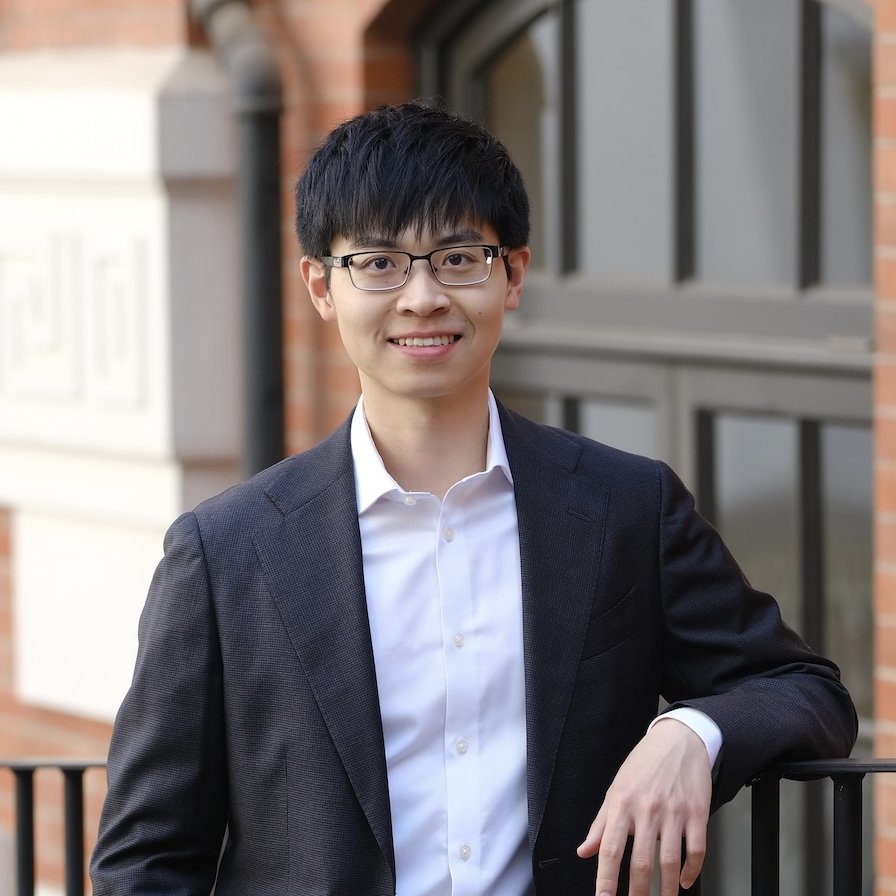

Keynote Speakers

Workshop Programme (Half‑day)

Room 306B · 19 Oct 2025

| Time | Session |
|---|---|
| 09:00 – 09:30 | Opening Remarks |
| 09:30 – 10:05 | Keynote: Bernard Ghanem, "Towards Robust Multimodal Egocentric Video Understanding" |
| 10:05 – 10:40 | Keynote: Dima Damen, "Video Understanding Out of the Frame: An Egocentric Perspective" |
| 10:40 – 11:00 | Break & Poster Session |
| 11:00 – 11:45 | Invited Paper Presentations |
| 11:45 – 12:20 | Keynote: Addison Lin Wang, "360 Vision in the Foundation AI Era: Principles, Methods, and Future Directions" |
| 12:20 – 12:35 | Awards Ceremony & Concluding Remarks |

BinEgo‑360° Challenge

The challenge uses our public 360+x dataset for training and validation, and a held-out test set for evaluation.

- Apart from the prizes, challenge winners will be invited to submit a paper or report for inclusion in the workshop proceedings and to present their work at the workshop.

Important Notice:

New Final Submission Deadline ▶ 20 August 2025 (AoE)

We are delighted to announce that the challenge deadline has been extended, so you now have more time to cook up your best solutions!

Dataset Overview

- 2,152 videos – 8.579 M frames / 67.78 h.

- Viewpoints: 360° panoramic, binocular & monocular egocentric, third‑person front.

- Modalities: RGB video, 6‑channel spatial audio, GPS + weather, text scene description.

- Annotations: 38 action classes, temporal segments; object bounding boxes.

- Resolution: 5K originals (5,760 × 2,880 panoramas).

- License: CC BY‑NC‑SA 4.0. All faces auto‑blurred.

Challenge Tracks & Baselines

1 · Classification

Predict the scene label for a whole clip. We follow the scene categories provided in the dataset.

- Input: 360° RGB + egocentric RGB + audio/binaural delay.

- Output: The scene label.

- Metric: Top‑1 accuracy on the test set.

Baseline (all views and modalities used)
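A minimal sketch of the Top‑1 metric, assuming each clip's prediction is a dictionary of per-scene scores. The helper `top1_accuracy`, the clip identifiers, and the scene labels are hypothetical illustrations, not part of any official evaluation kit.

```python
from typing import Mapping


def top1_accuracy(scores: Mapping[str, Mapping[str, float]],
                  ground_truth: Mapping[str, str]) -> float:
    """Share of test clips whose highest-scoring scene label equals the true label.

    `scores` maps a (hypothetical) clip id to {scene_label: score};
    `ground_truth` maps a clip id to its true scene label.
    Clips with no prediction are counted as errors.
    """
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    correct = 0
    for clip_id, true_label in ground_truth.items():
        clip_scores = scores.get(clip_id)
        # Top-1: keep only the single label with the largest score.
        if clip_scores and max(clip_scores, key=clip_scores.get) == true_label:
            correct += 1
    return correct / len(ground_truth)
```

For example, with predictions for two of three clips where only one top-scoring label is correct, the sketch returns 1/3, since the unpredicted clip counts as a miss.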

Top‑1 Acc: 80.62 %

2 · Temporal Action Localization

Detect the start and end time of every action instance inside a clip.

- Input: same modalities as Track 1.

- Output: one JSON record per detection:

  {"video_id": ..., "t_start": ..., "t_end": ..., "label": ...}

- Metric: mAP averaged over temporal IoU (tIoU) thresholds {0.5, 0.75, 0.95}.
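A minimal sketch of producing records with the four listed fields. It assumes times are in seconds and that all detections are collected into one JSON array; neither the time unit nor the file container is specified above, and `detection_record` / `dump_detections` are hypothetical helpers.

```python
import json
from typing import List


def detection_record(video_id: str, t_start: float, t_end: float, label: str) -> dict:
    """Build one Track 2 detection with the four listed fields.

    Times are assumed (not confirmed) to be seconds from the start of the clip.
    """
    # Reject empty or reversed segments before they reach the submission.
    if not 0 <= t_start < t_end:
        raise ValueError(f"invalid segment [{t_start}, {t_end}] in {video_id}")
    return {"video_id": video_id, "t_start": float(t_start),
            "t_end": float(t_end), "label": label}


def dump_detections(records: List[dict]) -> str:
    """Serialize all detections as one JSON array (an assumed container format)."""
    return json.dumps(records, ensure_ascii=False)
```

Validating segments at construction time keeps malformed detections out of the file, rather than discovering them after a submission has been spent.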

Baseline (TriDet + VAD)

| Metric | Score |
|---|---|
| mAP@0.5 | 27.1 |
| mAP@0.75 | 18.7 |
| mAP@0.95 | 7.0 |
| Average | 17.6 |
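The Average row is the mean of the three thresholds: (27.1 + 18.7 + 7.0) / 3 = 17.6. The sketch below shows one common way to compute such a score, ActivityNet-style interpolated AP with greedy one-to-one matching; it is not necessarily the challenge's official scorer. Because ranking detections requires a confidence, it assumes each detection carries an extra `score` field that the record layout above does not list. All function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def temporal_iou(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """IoU of two time segments [a_start, a_end] and [b_start, b_end]."""
    inter = max(0.0, min(a_end, b_end) - max(a_start, b_start))
    union = (a_end - a_start) + (b_end - b_start) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[dict], gts: List[dict], thr: float) -> float:
    """Interpolated AP for a single class at one tIoU threshold."""
    if not gts:
        return 0.0
    gt_by_video: Dict[str, List[dict]] = defaultdict(list)
    for g in gts:
        gt_by_video[g["video_id"]].append(g)
    # One flag per ground-truth instance so each can be matched at most once.
    matched = {vid: [False] * len(items) for vid, items in gt_by_video.items()}

    precisions, recalls = [], []
    n_tp = n_fp = 0
    # Walk detections from most to least confident (assumed `score` field).
    for d in sorted(dets, key=lambda x: x["score"], reverse=True):
        best_iou, best_j = -1.0, -1
        for j, g in enumerate(gt_by_video.get(d["video_id"], [])):
            iou = temporal_iou(d["t_start"], d["t_end"], g["t_start"], g["t_end"])
            if iou >= thr and iou > best_iou and not matched[d["video_id"]][j]:
                best_iou, best_j = iou, j
        if best_j >= 0:
            matched[d["video_id"]][best_j] = True
            n_tp += 1
        else:
            n_fp += 1  # no unmatched ground truth overlaps enough
        precisions.append(n_tp / (n_tp + n_fp))
        recalls.append(n_tp / len(gts))

    # Make precision non-increasing in recall, then integrate over recall steps.
    mprec = [0.0] + precisions + [0.0]
    mrec = [0.0] + recalls + [1.0]
    for i in range(len(mprec) - 2, -1, -1):
        mprec[i] = max(mprec[i], mprec[i + 1])
    return sum(
        (mrec[i] - mrec[i - 1]) * mprec[i]
        for i in range(1, len(mrec))
        if mrec[i] != mrec[i - 1]
    )


def tal_map(dets: List[dict], gts: List[dict],
            thresholds: Sequence[float] = (0.5, 0.75, 0.95)) -> Tuple[Dict[float, float], float]:
    """Return per-threshold mAP (mean AP over ground-truth classes) and their average."""
    classes = sorted({g["label"] for g in gts})
    if not classes:
        raise ValueError("no ground-truth instances")
    per_thr: Dict[float, float] = {}
    for thr in thresholds:
        aps = [
            average_precision([d for d in dets if d["label"] == c],
                              [g for g in gts if g["label"] == c], thr)
            for c in classes
        ]
        per_thr[thr] = sum(aps) / len(aps)
    return per_thr, sum(per_thr.values()) / len(per_thr)
```

Scores come out as fractions; multiply by 100 to compare with the table. For example, a single prediction covering 0–8 s of a 0–10 s ground-truth instance has tIoU 0.8, so it is a hit at 0.5 and 0.75 but a miss at 0.95, giving an average of about 0.667.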

🏆 Competition Winners

Congratulations to the teams whose solutions topped the Classification (CLS) and Temporal Action Localization (TAL) tracks of the BinEgo‑360° Challenge. The podium finishers and their affiliations are listed below.

CLS Track

1st Place

Xudong Cao, Lei Hei, Xudong Wang, and Liangqu Long

Corresponding Author: Liangqu Long

Team: Insta360 ML2

2nd Place

Rishav Sanjay

RV University Bengaluru

Team: EigenAdapt

3rd Place

Paul Ng’ang’a Kamau

Kenyatta University & Moringa School

TAL Track

1st Place

Duong Anh Kiet and Petra Gomez-Krämer

L3i Laboratory, La Rochelle University

Team: L3I

2nd Place

Paul Ng’ang’a Kamau

Kenyatta University & Moringa School

3rd Place

Chan U Wang 陳裕弘

University of Macau

Timeline (Anywhere on Earth)

- 1 Jun 2025: Dataset & baselines release; Kaggle opens

- 20 Aug 2025: Submission deadline

- Sep 2025: Technical report and poster due

- 19–20 Oct 2025: Awards & talks at ICCV 2025 workshop

Submission Rules

- Teams (≤ 5 members) register on Kaggle and fill in the team form.

- Up to 5 submissions per track per team – the last one counts.

- Winners must submit a technical report and a poster to be presented at the workshop.

- External data that overlaps with the hidden test clips is not allowed.

- Any submission after the deadline will not be considered.

Prizes & Sponsors

Ethics & Broader Impact

All videos were recorded in public or non‑sensitive areas with informed participant consent. Faces are automatically blurred, and the dataset is released for non‑commercial research under CC BY‑NC‑SA 4.0. We prohibit any re‑identification, surveillance or commercial use. By advancing robust multi‑modal perception, we aim to benefit robotics, AR/VR and assistive tech while upholding fairness and privacy.

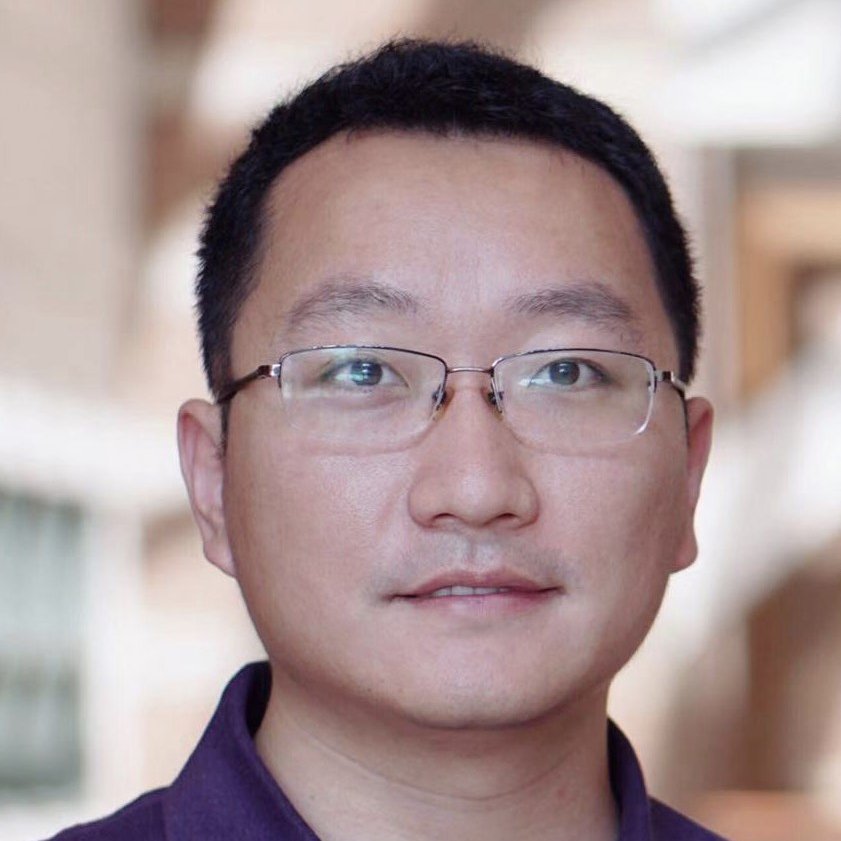

Organisers

Technical Committee:

Contact: j.jiao@bham.ac.uk

Sponsors

We gratefully acknowledge the generous support of our sponsors.

Publication(s)

If you use the 360+x dataset or participate in the challenge, please consider citing:

@inproceedings{chen2024x360,
  title     = {360+x: A Panoptic Multi-modal Scene Understanding Dataset},
  author    = {Chen, Hao and Hou, Yuqi and Qu, Chenyuan and Testini, Irene and Hong, Xiaohan and Jiao, Jianbo},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year      = {2024}
}